Varrow Madness put a heavy emphasis on hands-on labs this year. We Varrowites definitely like getting our hands dirty, and we wanted to give you the chance to get your hands on the products as well. Our goal was to give Madness attendees a taste of both VMware View and Citrix XenDesktop, and to show them the technical pieces of the provisioning process required to deliver a virtual desktop to their users.

Our overall vision for how the labs would run at Madness this year was built around our experiences with the hands-on labs at the major vendor conferences, such as VMworld, VMware PEX, and Citrix Synergy. Measuring our labs against those set the bar pretty high, and I think we delivered a very well-developed lab. The Varrow-hosted labs for the EUC practice were not the only labs at Varrow Madness, either: attendees also had access to the full list of VMware Hands-on Labs hosted in the cloud, the same revered labs that VMware offered to partners at PEX.

Varrow Labs Behind the Scenes

Dave Lawrence, Director of End User Computing at Varrow, volunteered me to help put the labs together several weeks before Madness. I was pretty excited to tackle this opportunity, so I jumped at the chance.

I wanted to share details on the hardware and software setup we used to deliver more than twenty isolated environments. Maybe this will inspire a way to use vCloud Director in your own environment to enable your users: spinning up multiple isolated or fenced vApps for your developers, testing a new product, or supporting a temporary project. The use cases are limited only by what you can imagine 🙂

Lab Hardware

Compute

- (2) – 6120XP FI

- (2) – 2104XP IO Modules

- UCS5108 Chassis

- Blade 1 – B200 M1

- (2) X5570 – 2.933GHz

- 32GB 1333MHz RAM

- N20-AC0002 – Cisco UCS M81KR Virtual Interface Card – Network adapter – 10 Gigabit LAN, FCoE – 10GBase-KR

- Blade 2 – B200 M1

- (2) X5570 – 2.933GHz

- 32GB 1333MHz RAM

- N20-AC0002 – Cisco UCS M81KR Virtual Interface Card – Network adapter – 10 Gigabit LAN, FCoE – 10GBase-KR

- Blade 3 – B200 M2

- (2) E5649 – 2.533GHz

- 48GB 1333MHz RAM

- N20-AC0002 – Cisco UCS M81KR Virtual Interface Card – Network adapter – 10 Gigabit LAN, FCoE – 10GBase-KR

- Blade 4 – B200 M1

- (2) X5570 – 2.933GHz

- 24GB 1333MHz RAM

- N20-AQ0002 – Cisco UCS M71KR-E Emulex Converged Network Adapter (limited to two 10Gb NICs)

- UCS5108 Chassis

- Blade 1 – B200 M1

- (2) X5570 – 2.933GHz

- 24GB 1333MHz RAM

- N20-AC0002 – Cisco UCS M81KR Virtual Interface Card – Network adapter – 10 Gigabit LAN, FCoE – 10GBase-KR

- Blade 2 – B200 M2

- (2) E5649 – 2.533GHz

- 48GB 1333MHz RAM

- N20-AC0002 – Cisco UCS M81KR Virtual Interface Card – Network adapter – 10 Gigabit LAN, FCoE – 10GBase-KR

- Blade 3 – B200 M3

- (2) E5-2630 CPUs (12 cores total)

- 96GB 1600MHz RAM

- MLOM VIC

- Blade 4 – B200 M3

- (2) E5-2630 CPUs (12 cores total)

- 96GB 1600MHz RAM

- MLOM VIC
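To put the compute list in perspective, here is a quick tally of the physical capacity across the eight blades, written as a small Python sketch. The per-socket core counts are assumptions taken from Intel's published specifications (four cores for the X5570, six for the E5649 and the E5-2630), and the "12 Core" note on the B200 M3 blades is read as twelve cores per blade. Hyperthreads and hypervisor overhead are not counted.

```python
from dataclasses import dataclass


@dataclass
class Blade:
    chassis: int
    slot: int
    model: str
    sockets: int
    cores_per_socket: int  # assumption: Intel's published core count per CPU
    ram_gb: int


# Inventory transcribed from the Compute list above.
BLADES = [
    Blade(1, 1, "B200 M1", 2, 4, 32),  # X5570
    Blade(1, 2, "B200 M1", 2, 4, 32),  # X5570
    Blade(1, 3, "B200 M2", 2, 6, 48),  # E5649
    Blade(1, 4, "B200 M1", 2, 4, 24),  # X5570
    Blade(2, 1, "B200 M1", 2, 4, 24),  # X5570
    Blade(2, 2, "B200 M2", 2, 6, 48),  # E5649
    Blade(2, 3, "B200 M3", 2, 6, 96),  # E5-2630, 12 cores per blade
    Blade(2, 4, "B200 M3", 2, 6, 96),  # E5-2630, 12 cores per blade
]


def totals(blades: list[Blade]) -> tuple[int, int]:
    """Return (physical cores, RAM in GB) summed across all blades."""
    cores = sum(b.sockets * b.cores_per_socket for b in blades)
    ram = sum(b.ram_gb for b in blades)
    return cores, ram


if __name__ == "__main__":
    cores, ram = totals(BLADES)
    print(f"{len(BLADES)} blades, {cores} physical cores, {ram} GB RAM")
```

Run as a script, it prints `8 blades, 80 physical cores, 400 GB RAM`, the physical pool that all of the nested lab pods shared.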

Storage

- VNX5300

- (16) – 2TB 7.2K NL SAS Drives

- (5) – 100GB SSD – Configured as FAST Cache

- (25) – 600GB 10K SAS 2.5″

Networking

- N7K-M148GS-11L – 48 Port Gigabit Ethernet Module (SFP)

- N7K-M108X2-12L – 8 Port 10 Gigabit Ethernet Module with XL Option

- N7K-M132XP-12L – 32 Port 10GbE with XL Option, 80G Fabric

- N7K-M132XP-12L – 32 Port 10GbE with XL Option, 80G Fabric

- N7K-SUP1 – Supervisor Module

- N7K-SUP1 – Supervisor Module

- N7K-M108X2-12L – 8 Port 10 Gigabit Ethernet Module with XL Option

- SG300-28 – GigE Managed Small Business Switch

- DS-C9124-K9 – MDS 9124 Fabric Switch

- DS-C9148-16p-K9 – MDS 9148 Fabric Switch

- Nexus 5010

- w/ N5K-M1008 – 4Gb FC Expansion Module

- Nexus 2148T Fabric Extender

- Connected to 5010

Thin Clients

Cisco provided the thin clients used in the labs. The thin clients were capable of RDP, ICA, and PCoIP connections. Each thin client station connected via Remote Desktop, through a NAT IP, to its assigned vCenter server. The thin clients were powered over Ethernet (PoE), drew very little power, and are probably representative of what you would deploy if you were planning a thin client rollout.

vCloud Director

Each UCS blade ran ESXi, and all of the hosts were managed by a virtualized VMware vCenter Server 5.1. We used the vCloud Director appliance to build a vApp for each lab and created a catalog for each lab environment, from which we could check out a copy for each workstation. We also deployed vCenter Operations Manager so that we could monitor the lab environment.

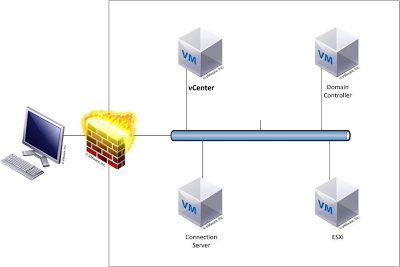

vApp Design

Each vApp, or Pod, in both the XenDesktop and the VMware View environments initially consisted of four virtual machines. Each Pod was fenced off from the others to give each user their own isolated EUC environment. The networks on the vSphere Distributed Switch for each vApp were built dynamically as each vApp was powered on.

VMware View

- Domain Controller

- VirtualCenter – SQL, VC 5.1 – users connect to this VM via RDP over a NAT IP to work through the lab

- View Connection Server – View Composer

- ESXi Server – hosting desktops – Virtualized ESXi

Citrix XenDesktop

- Domain Controller

- VirtualCenter – SQL, VC 5.1 – users connect to this VM via RDP over a NAT IP to work through the lab

- Citrix Desktop Delivery Controller, Citrix Provisioning Server

- ESXi Server – hosting desktops – Virtualized ESXi

The general flow for both platforms was:

- Connect to vCenter from the thin client via RDP

- Provision multiple desktops via product-specific technology (View Composer for View and Provisioning Server for XenDesktop)

- Connect to the provisioned desktops
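The fenced Pod design can be pictured with a small conceptual model. This is a hypothetical Python sketch, not vCloud Director's API; vCloud Director performs the fencing and NAT itself. It assumes every Pod reuses identical internal addresses while each Pod's vCenter VM is reached from its thin client through a unique NAT IP. The 192.168.10.x and 10.1.x.x addresses, the VM names, and the `build_pods` helper are all invented for illustration.

```python
import ipaddress
from dataclasses import dataclass, field

# Hypothetical internal addressing, identical in every Pod. Fencing is what
# lets these duplicate addresses coexist without conflicting.
POD_TEMPLATE = {
    "domain-controller": "192.168.10.10",
    "vcenter": "192.168.10.20",      # SQL + vCenter 5.1; the user's RDP target
    "broker": "192.168.10.30",       # View Connection Server/Composer, or DDC + PVS
    "nested-esxi": "192.168.10.40",  # virtualized ESXi hosting the desktops
}


@dataclass
class Pod:
    number: int
    product: str  # "View" or "XenDesktop"
    nat_ip: str   # unique outside address translated to this Pod's vCenter VM
    # Each Pod gets its own copy of the template, never a shared dict.
    vms: dict = field(default_factory=lambda: dict(POD_TEMPLATE))


def build_pods(count: int, product: str, external_base: str) -> list[Pod]:
    """Create `count` identical Pods, each with its own outside NAT IP."""
    base = ipaddress.IPv4Address(external_base)
    return [Pod(n, product, str(base + n)) for n in range(1, count + 1)]


if __name__ == "__main__":
    pods = build_pods(10, "View", "10.1.1.100") + build_pods(
        10, "XenDesktop", "10.1.2.100"
    )
    for pod in pods:
        print(f"{pod.product} pod {pod.number}: RDP to {pod.nat_ip} "
              f"(vCenter inside the pod is {pod.vms['vcenter']})")
    # Internal addresses repeat across Pods; outside addresses never do.
    assert len({p.nat_ip for p in pods}) == len(pods)
```

Giving each Pod its own copy of the template, rather than one shared dictionary, echoes the isolation the fenced vApps provided: a change inside one Pod never leaks into another.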

And the Madness Begins

We shipped the server rack containing all of the lab equipment, along with the workstations, to the event and set everything up the day before Madness. Getting a full rack shipped and powered at a site not built for it has its challenges; luckily, we have some really talented and knowledgeable people at Varrow who can navigate these issues. Madness was also our first chance to stress test the labs with a full user load. Later that evening we called all hands on deck and sent everyone through the labs, with a mix of technical and non-technical Varrowites in the room pounding away. We were seeing workloads in excess of 10,000 to 15,000 IOPS, and things were running pretty smoothly… until I checked out the Citrix lab vApp from the vCloud catalog to make some necessary changes.

The graph on the right illustrates what happened between when I kicked off the Add to Cloud task and when it finished: it crushed the storage and pushed latency to ludicrous levels. So the most important lesson for running a lab: keep Phillip away from the keyboard. You can thank Jason Nash for that screenshot and the accompanying commentary.

The labs were a huge team effort. Special shout-out to everyone who helped us before, during, and after the event: Dave Lawrence, Jason Nash, Bill Gurling, Art Harris, Tracy Wetherington, Thomas Brown, Jason Girard, Jeremy Gillow, and everyone else at Varrow who helped out with the labs.

A lot of work goes into putting the labs together, and all of them, both the Varrow-hosted EUC labs and the VMware-hosted labs, were well received and well attended at Madness. I hope to be part of the lab team next year; we are already talking about ways to expand the labs and the range of products we demonstrate. Can't wait until next year. See you at Varrow Madness 2014!